Federated learning, communication efficiency, compression, parameter, server, model update

Vol. 51, No. 1, pp. 55-59, Jan. 2026

10.7840/kics.2026.51.1.55

Cite this article

[IEEE Style]

S. Kwon and S. Park, "Federated learning, communication efficiency, compression, parameter, server, model update," The Journal of Korean Institute of Communications and Information Sciences, vol. 51, no. 1, pp. 55-59, 2026. DOI: 10.7840/kics.2026.51.1.55.

[ACM Style]

Sehyeon Kwon and Sangjun Park. 2026. Federated learning, communication efficiency, compression, parameter, server, model update. The Journal of Korean Institute of Communications and Information Sciences, 51, 1, (2026), 55-59. DOI: 10.7840/kics.2026.51.1.55.

[KICS Style]

Sehyeon Kwon and Sangjun Park, "Federated learning, communication efficiency, compression, parameter, server, model update," The Journal of Korean Institute of Communications and Information Sciences, vol. 51, no. 1, pp. 55-59, 1. 2026. (https://doi.org/10.7840/kics.2026.51.1.55)

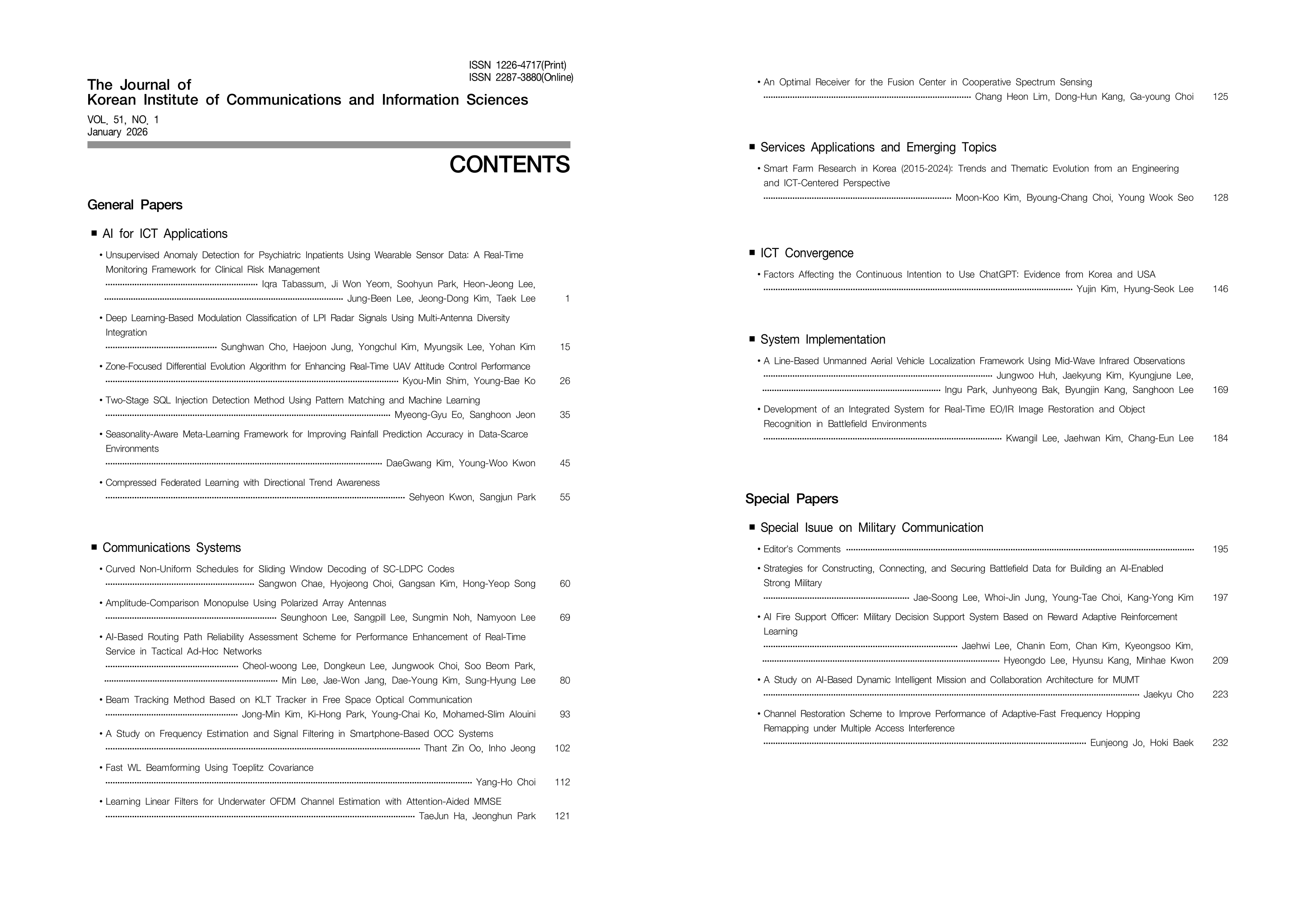
