Analyzing Quantized Small Language Models for Efficient Edge Deployment

Vol. 50, No. 9, pp. 1364-1380, Sep. 2025

10.7840/kics.2025.50.9.1364

Cite this article

[IEEE Style]

S. Jang, S. Yang, C. Choi, "Analyzing Quantized Small Language Models for Efficient Edge Deployment," The Journal of Korean Institute of Communications and Information Sciences, vol. 50, no. 9, pp. 1364-1380, 2025. DOI: 10.7840/kics.2025.50.9.1364.

[ACM Style]

Sooyoung Jang, Seungho Yang, and Changbeom Choi. 2025. Analyzing Quantized Small Language Models for Efficient Edge Deployment. The Journal of Korean Institute of Communications and Information Sciences, 50, 9, (2025), 1364-1380. DOI: 10.7840/kics.2025.50.9.1364.

[KICS Style]

Sooyoung Jang, Seungho Yang, Changbeom Choi, "Analyzing Quantized Small Language Models for Efficient Edge Deployment," The Journal of Korean Institute of Communications and Information Sciences, vol. 50, no. 9, pp. 1364-1380, 9. 2025. (https://doi.org/10.7840/kics.2025.50.9.1364)

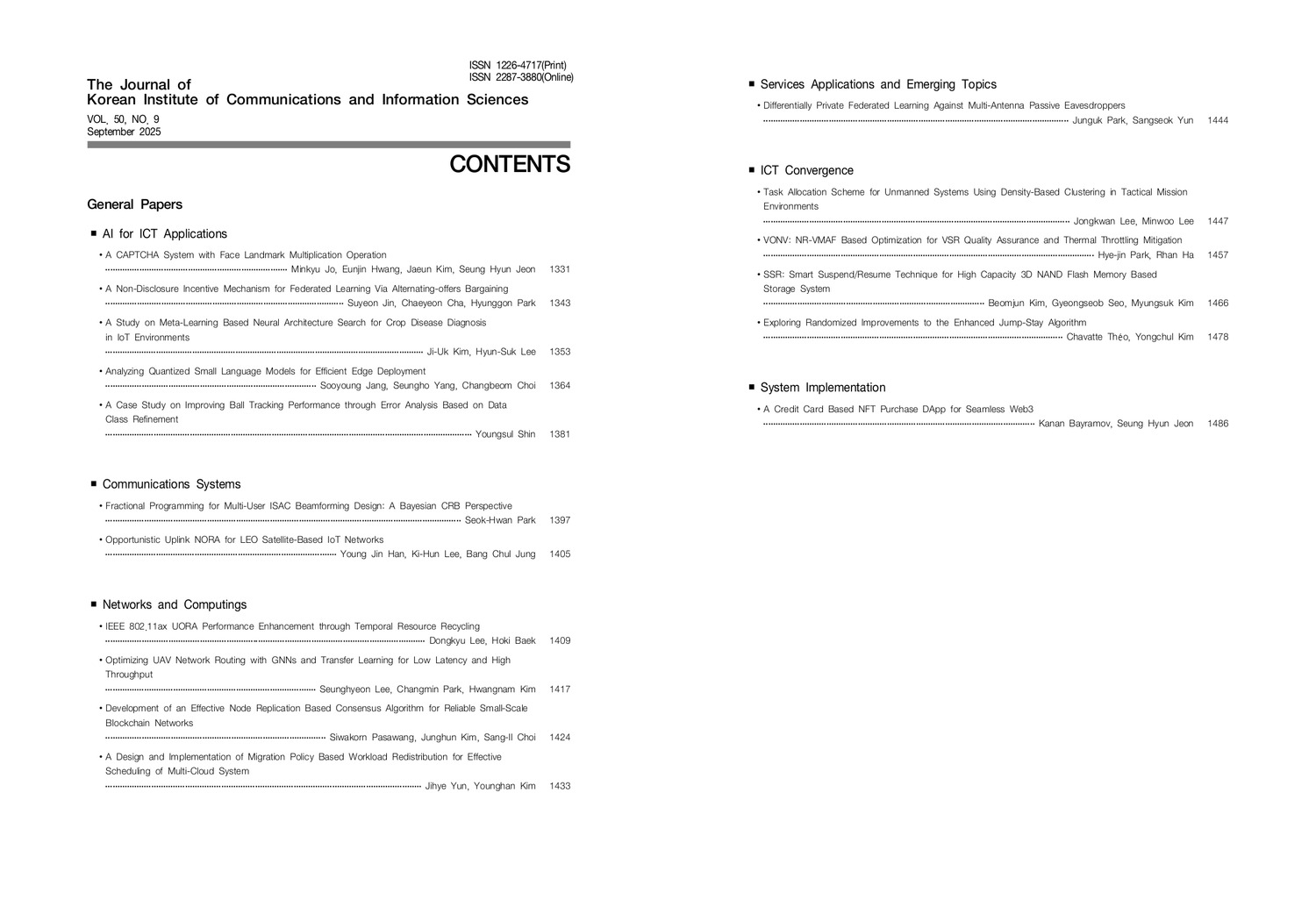
