
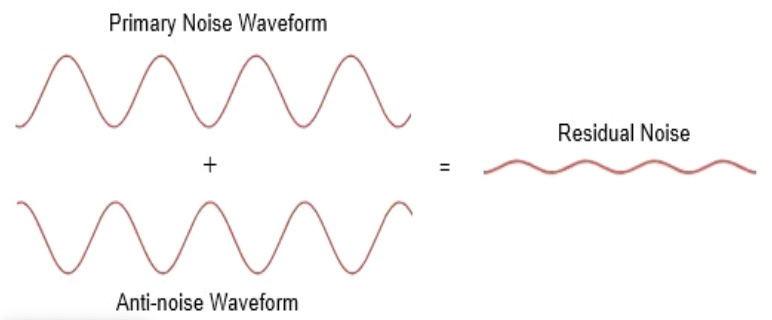

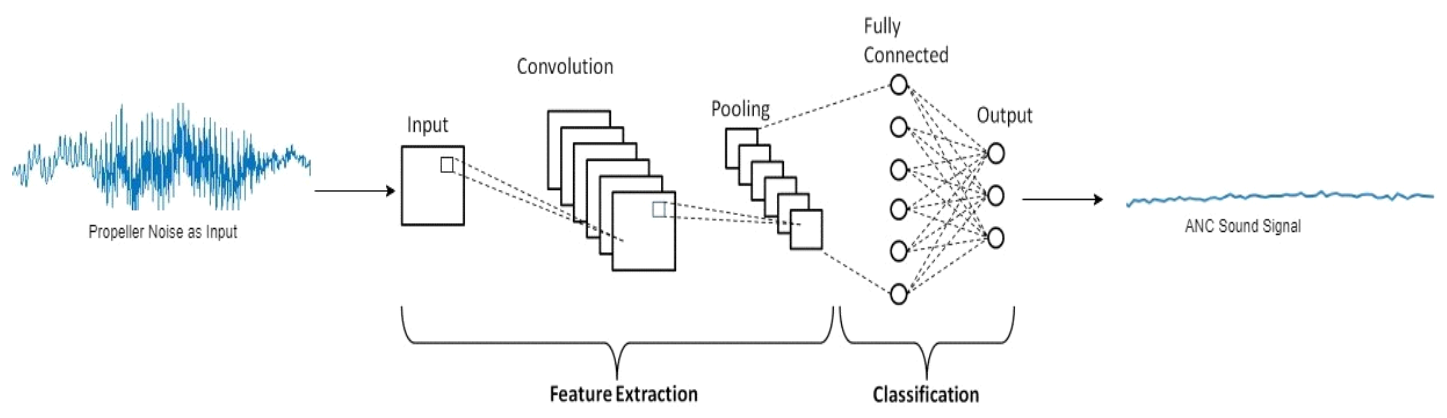

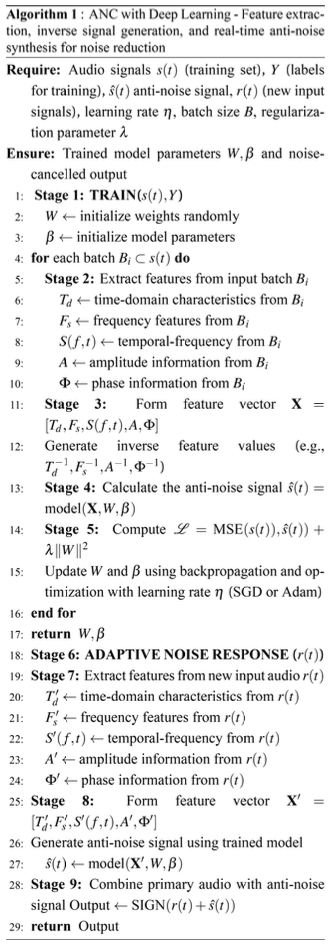

Faisal Ayub Khan♦ and Soo Young Shin°

Suppressing the Acoustic Effects of UAV Propellers through Deep Learning-Based Active Noise Cancellation

Abstract: This study presents a deep learning-based Active Noise Cancellation (ANC) system for reducing UAV propeller noise using a Convolutional Neural Network (CNN) model. The proposed system minimizes noise in real time by extracting key audio features such as amplitude, phase, and frequency components, then computing inverse feature values to construct precise anti-noise signals. This approach enables destructive interference, significantly reducing the propeller noise. The model achieved 94.5% accuracy, 93.2% precision, 96.1% recall, and a loss value of 0.115, demonstrating its efficacy in noise cancellation. Deployed on an Nvidia Jetson NX, the ANC system integrates high-quality microphones and strategically placed speakers on a UAV platform, allowing for real-time noise analysis and anti-noise generation. Indoor and outdoor tests validated a reduction in propeller noise of up to 36 dB, highlighting the model’s robustness and potential for quieter UAV operation in noise-sensitive settings.

Keywords: Deep Learning, Unmanned Aerial Vehicles (UAVs), Convolutional Neural Network (CNN), Adaptive ANC, Neural Network (NN), Real-time Noise Mapping

Ⅰ. Introduction

Unmanned Aerial Vehicles (UAVs) are finding applications across diverse sectors, including agriculture, surveillance, emergency response, and delivery services. Their ability to perform tasks autonomously in hard-to-reach areas has transformed industries, improving operational efficiency and reducing human risk[1]. However, UAVs face a significant hurdle in terms of their acceptability in urban and densely populated areas due to the noise generated by their propellers[2,3].
This high-frequency, intrusive noise can be disruptive, especially in settings where quiet is crucial[2], such as residential neighborhoods, wildlife monitoring sites, or medical facilities.

Building upon our prior work on noise reduction for UAVs[4], where we developed an initial framework to mitigate propeller noise, this study advances the approach by enhancing model effectiveness, alongside hardware implementation to improve noise cancellation performance. Active Noise Cancellation (ANC) techniques can tackle the pervasive noise pollution associated with UAVs[5] by employing sophisticated algorithms and sensor arrays to detect and counteract the noise generated by propulsion systems and aerodynamic forces. ANC operates on the principle of “destructive interference”: the system captures sound through multiple sensors and processes the data to create anti-noise signals that are equal in amplitude but precisely 180° out of phase[6]. As the two signals converge, they undergo destructive interference, effectively nullifying each other’s effects. Fig. 1 illustrates this fundamental concept, displaying how the primary noise and anti-noise interact to achieve a residual noise level that is substantially lower than the original. This noise reduction requires precise calibration: the anti-noise must match the primary noise in magnitude and oppose it in phase for optimal cancellation[7].

In UAV applications, the effectiveness of ANC becomes even more impactful when combined with deep learning (DL) models[8]. By training deep neural networks on extensive datasets of UAV noise profiles, the system learns to recognize specific noise signatures generated by UAV propellers and aerodynamic forces[7].
This training enables the neural networks to extract detailed audio features, such as frequency components, amplitude variations, and noise directionality, which contribute to a more precise anti-noise generation process[9]. Additionally, the adaptive capabilities of deep learning enhance the ANC system’s response to changing conditions, such as varying altitudes, wind patterns, and speed, which all impact noise levels. Using an adaptive approach, the system continuously adjusts anti-noise signals to match the dynamic noise sources, ensuring higher cancellation effectiveness and faster response times compared to traditional ANC systems. This data-driven method allows the system to learn and adapt to new noise patterns encountered during operation, improv-

Ⅱ. Literature Review

While multiple studies have investigated traditional Active Noise Cancellation (ANC) systems, these techniques generally rely on adaptive filtering methods that process incoming signals to cancel out noise. One of the most widely used approaches is the Least Mean Square (LMS) algorithm, which adapts the filter coefficients based on the error signal. However, LMS has limitations, such as slow convergence and an inability to handle non-stationary noise signals effectively[11,12]. Filtered-X LMS (FXLMS) improves upon LMS by filtering the reference noise signal through an estimate of the secondary path before it enters the coefficient update, which helps with stability in certain applications. Still, FXLMS struggles with highly dynamic noise environments, such as those encountered in UAV applications, and is computationally expensive when dealing with multiple frequencies or complex interference patterns[13,14]. Other traditional ANC techniques, such as the Wiener filter[15] and adaptive notch filtering[16], also exhibit performance limitations in non-stationary environments.
While the Wiener filter can be highly effective in stationary noise environments, it suffers from poor adaptability in rapidly changing conditions, like those encountered in UAV applications[17]. Similarly, adaptive notch filtering performs well in filtering out specific frequency bands but cannot adapt to the complex and varying noise patterns that are typical of real-time operation.

The limitations of these traditional ANC methods underscore the need for more advanced techniques capable of handling complex, time-varying, and multi-frequency noise signals. To address these challenges, our proposed system introduces a deep learning-based approach, utilizing Convolutional Neural Networks (CNNs) for noise cancellation. CNNs are adept at extracting complex features from high-dimensional inputs such as spectrograms, which represent time-frequency information from noisy audio signals. By learning the intricate patterns within the noise data, CNNs can generate highly accurate anti-noise signals tailored to counteract the noise in real time[18,19]. Unlike traditional ANC methods, which rely on predefined models or manual feature extraction, CNN-based ANC systems adapt to new, unseen noise patterns through training on large datasets. This flexibility allows the system to continuously learn and improve its performance, making it more effective in dynamic environments[18]. Additionally, the reaction time of CNN-based ANC systems is significantly faster than that of traditional methods, as CNNs can process and output noise-canceling signals almost instantly once trained, reducing the delay commonly observed in adaptive filtering methods like LMS and FXLMS[20].

In contrast to traditional ANC systems, CNNs can handle non-stationary and multi-frequency noise efficiently, making them well suited to applications such as UAVs, where the noise environment is highly dynamic and complex[21].
Furthermore, as mentioned in [18], the computational efficiency of deep learning approaches, particularly with advancements in hardware acceleration (e.g., GPUs), allows real-time operation even in complex scenarios. This study focuses on extracting relevant features from the UAV propeller noise, such as amplitude, frequency, and phase information, through a CNN. The extracted features are then used to generate inverse values, which are translated into an anti-noise signal. This signal, when played back through speakers directed toward the propeller, reduces the primary noise through destructive interference.

Deep Learning models, particularly Convolutional Neural Networks (CNNs), are highly effective for complex noise cancellation tasks, including reducing propeller noise, which presents unique spectral patterns and time-variant characteristics that challenge traditional signal processing methods. In these models, CNNs analyze time-frequency representations like spectrograms derived from audio recordings[22], where each frame serves as input to the network. Through a series of convolutional and pooling layers, CNN-based ANC systems extract essential audio features (such as amplitude, frequency spectrum, and phase information) by first converting the raw audio into a spectrogram that captures both temporal and frequency data[23]. Convolutional layers apply learnable filters across this spectrogram to detect relevant patterns at different scales, while pooling layers downsample feature maps, preserving important characteristics and optimizing computational efficiency[24]. Deeper layers within the network recognize complex noise patterns, and fully connected layers map these features to anti-noise signals, enabling targeted noise cancellation.
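
The convolution and pooling steps described above can be illustrated with a small, dependency-free sketch. It is not the authors' implementation: the function names `conv2d_valid` and `max_pool2d`, the 5×5 toy "spectrogram", and the 2×2 summing filter are assumptions chosen only to make the sliding-window sum and the window maximum concrete. A real system would use a deep learning framework with learned filters.

```python
def conv2d_valid(x, w, bias=0.0):
    """Slide filter w over 2-D input x (no padding, stride 1) and sum products."""
    rows, cols = len(x), len(x[0])
    m_dim, n_dim = len(w), len(w[0])
    out = []
    for i in range(rows - m_dim + 1):
        row = []
        for j in range(cols - n_dim + 1):
            acc = bias
            for m in range(m_dim):
                for n in range(n_dim):
                    acc += w[m][n] * x[i + m][j + n]
            row.append(acc)
        out.append(row)
    return out


def max_pool2d(h, size=2):
    """Non-overlapping max pooling with a size x size window."""
    return [
        [max(h[i + m][j + n] for m in range(size) for n in range(size))
         for j in range(0, len(h[0]) - size + 1, size)]
        for i in range(0, len(h) - size + 1, size)
    ]


# Toy magnitude "spectrogram": rows are frequency bins, columns are time frames.
spec = [
    [0.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 2.0, 2.0],
    [0.0, 0.0, 0.0, 2.0, 2.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
kernel = [[1.0, 1.0], [1.0, 1.0]]  # hypothetical 2x2 energy-summing filter

features = conv2d_valid(spec, kernel)  # 4 x 4 feature map
pooled = max_pool2d(features, size=2)  # 2 x 2 map after pooling

for row in pooled:
    print(row)
```

The pooled map keeps the strongest response in each region, so the high-energy patch in the lower-right corner of the toy input still dominates the output after the resolution has been halved.
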
Once extracted, these features pass to fully connected layers, which produce an anti-noise signal tailored to counteract the original propeller noise, thus reducing the overall sound level. During training, the CNN minimizes the difference between the generated cancellation signal and the primary propeller noise, learning how to cancel specific noise characteristics effectively[22]. The model’s generalization to various noise scenarios is enhanced by regularization techniques and extensive dataset training, equipping the system to adapt to new audio environments and diverse noise types, making it a robust solution for dynamic noise reduction in UAV applications.

2.1 Proposed Model

The proposed system model, as illustrated in Fig. 2, outlines a Deep Learning-based Active Noise Cancellation (ANC) architecture designed to mitigate UAV propeller noise. This model uses a Convolutional Neural Network (CNN) framework to process the propeller noise signal, $s(t)$, by extracting crucial features that allow for the precise generation of an anti-noise signal, $\hat{s}(t)$, which destructively interferes with the original noise, leaving only residual noise.

The system begins by capturing the propeller noise as an input, represented in the time domain as a continuous audio waveform. This input signal $s(t)$ is then converted into a time-frequency representation, specifically a spectrogram, denoted as $X(f, t)$, to capture both temporal and spectral characteristics. The spectrogram $X(f, t)$, where $f$ denotes frequency and $t$ denotes time, serves as the initial input to the CNN. Within the CNN, various audio features are extracted to facilitate effective ANC.
These features include time-domain characteristics $(T_d)$, which capture the temporal structure of the signal and provide insight into the periodicity and transient properties of the propeller noise. Frequency features $(F_s)$, derived from the spectrogram, reveal dominant frequency components crucial for identifying repetitive noise patterns from the propeller[24]. Amplitude information $(A)$ represents the intensity of the signal at different points, which helps in determining the energy profile of the noise. Phase information $(\Phi)$ is also captured, allowing the model to synchronize the anti-noise with the primary noise accurately. Furthermore, the temporal-frequency representation $S(f,t)$ provides a holistic view of how noise characteristics evolve over time, enabling adaptation to dynamic noise patterns.

Each frame of the spectrogram $X(f, t)$ is fed into the CNN, which consists of convolutional layers followed by pooling layers. The convolutional layers apply a set of filters, represented by weights $W_c$, across the spectrogram, extracting localized patterns and features at various scales[7]. Pooling layers downsample the feature maps, reducing dimensionality while preserving essential characteristics. The features extracted in this way are then passed to fully connected layers, which act as a classification and decision-making mechanism[25]. These layers map the features to an anti-noise signal $\hat{s}(t)$, calibrated to match the amplitude of the primary noise while being precisely 180° out of phase with it.
This anti-noise signal achieves destructive interference with the primary noise $s(t)$, significantly reducing the overall sound level. The anti-noise $\hat{s}(t)$ is produced by computing the inverse values of critical features, such as amplitude $A^{-1}$, frequency $F_s^{-1}$, and phase $\Phi^{-1}$. These values are combined through the fully connected layers to form a continuous anti-noise signal that neutralizes the propeller noise when superimposed. This dynamic and adaptive approach enables precise and efficient noise cancellation, enhancing operational stealth and minimizing the environmental impact of UAVs[8]. The algorithm detailed in the following sections outlines the step-by-step process for training the model, emphasizing the systematic feature extraction and integration that form the foundation of deep learning-based ANC.

In summary, the proposed model begins with the UAV propeller noise $s(t)$, transforms it into a spectrogram $X(f, t)$ for feature extraction, and processes this data through convolutional and pooling layers to identify relevant features, including time-domain characteristics, frequency, amplitude, phase, and the temporal-frequency representation $S(f, t)$. Fully connected layers utilize these features to generate an anti-noise signal $\hat{s}(t)$, which aligns in magnitude but opposes the primary noise in phase. The output anti-noise signal, when combined with the original propeller noise, reduces the perceived sound through destructive interference.
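
The destructive-interference principle summarized above can be checked numerically with a single tone. This is a minimal sketch rather than the authors' pipeline: the 200 Hz tone, the 5% amplitude error, and the 2° phase error are hypothetical values chosen for illustration, and the helper names `tone`, `rms`, and `reduction_db` are assumptions.

```python
import math


def tone(amplitude, freq_hz, phase_rad, n_samples, sample_rate):
    """Sampled sinusoid A * cos(2*pi*f*t + phase)."""
    return [
        amplitude * math.cos(2 * math.pi * freq_hz * k / sample_rate + phase_rad)
        for k in range(n_samples)
    ]


def rms(signal):
    """Root-mean-square level of a sampled signal."""
    return math.sqrt(sum(v * v for v in signal) / len(signal))


def reduction_db(primary, residual):
    """Level drop in dB between the primary noise and the residual."""
    return 20 * math.log10(rms(primary) / rms(residual))


SAMPLE_RATE = 22050  # Hz, the sampling rate quoted in Section 3.2
FREQ = 200.0         # Hz, hypothetical propeller tone
N = 22050            # one second of audio

primary = tone(1.0, FREQ, 0.3, N, SAMPLE_RATE)

# Ideal anti-noise: same amplitude, phase shifted by pi (180 degrees).
ideal_anti = tone(1.0, FREQ, 0.3 + math.pi, N, SAMPLE_RATE)
ideal_residual = [p + a for p, a in zip(primary, ideal_anti)]

# Imperfect anti-noise: 5% amplitude error and 2 degrees of phase error.
phase_err = math.radians(2.0)
real_anti = tone(0.95, FREQ, 0.3 + math.pi + phase_err, N, SAMPLE_RATE)
real_residual = [p + a for p, a in zip(primary, real_anti)]

print(f"ideal residual RMS: {rms(ideal_residual):.2e}")
print(f"reduction with small errors: {reduction_db(primary, real_residual):.1f} dB")
```

With perfect amplitude and phase matching the residual vanishes up to floating-point error, whereas even small mismatches cap the achievable reduction, which is why the anti-noise must be calibrated so precisely.
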
This model integrates deep learning with traditional ANC principles, ensuring precision and adaptability in complex and dynamic UAV environments. The design ensures that the model can adjust to varying noise characteristics, making it a robust solution for UAV noise reduction.

2.2 Algorithm

The proposed Algorithm 1 for Active Noise Cancellation (ANC) leverages a Deep Learning approach to reduce UAV propeller noise. Given an input audio signal $s(t)$, the system extracts essential features for noise cancellation, including time-domain characteristics $T_d$, frequency components $F_s$, amplitude $A$, phase $\Phi$, and a temporal-frequency representation $S(f,t)$. These features are then combined into a feature vector $\mathrm{X}=\left[T_d, F_s, S(f, t), A, \Phi\right]$, which serves as input to a Convolutional Neural Network (CNN) model. To produce the anti-noise signal, the model computes the inverse of these features, such as $T_d^{-1}$, $F_s^{-1}$, $A^{-1}$, and $\Phi^{-1}$, creating a feature set that counteracts the primary noise. During training, the model optimizes its parameters $W$ and $\beta$, which are crucial for the model’s learning process. $W$ represents the weights in the convolutional layers, which are learned during training to detect patterns and features in the input audio spectrograms.
These weights enable the model to capture the noise characteristics of the UAV propeller sound. $\beta$ represents the bias terms added to the weighted sum of inputs, allowing the model to adjust its output independently of the input. Together, $W$ and $\beta$ help the model optimize the noise-canceling signal by minimizing the loss function $\mathscr{L}=\operatorname{MSE}(s(t), \hat{s}(t))+\lambda\|W\|^2$, where MSE measures the difference between the primary noise $s(t)$ and the generated anti-noise signal $\hat{s}(t)$, while $\lambda\|W\|^2$ acts as a regularization term to prevent overfitting. In the real-time noise cancellation phase, the trained model processes new input signals $r(t)$ to generate the anti-noise output $\hat{s}(t)$, which is then combined with the incoming noise to achieve destructive interference and reduce the overall noise level.

2.3 Spectrogram Visualization

To facilitate feature extraction for noise cancellation, the input audio signal $s(t)$ is transformed into a time-frequency representation using the Short-Time Fourier Transform (STFT), which is expressed as:

$$X(f, t)=\operatorname{STFT}\{s(t)\}(f, t)=\int s(\tau)\, w(\tau-t)\, e^{-j 2 \pi f \tau}\, d \tau \tag{1}$$

where $X(f,t)$ represents the spectrogram, $f$ is the frequency variable, $t$ denotes time, $s(\tau)$ is the original audio signal, and $w(\tau-t)$ is a window function that localizes the Fourier transform within a specific time frame. The STFT enables the decomposition of $s(t)$ into a sequence of time-dependent frequency components, providing a comprehensive view of how the signal’s frequency characteristics evolve over time. The magnitude spectrogram $|X(f, t)|$ is computed as:

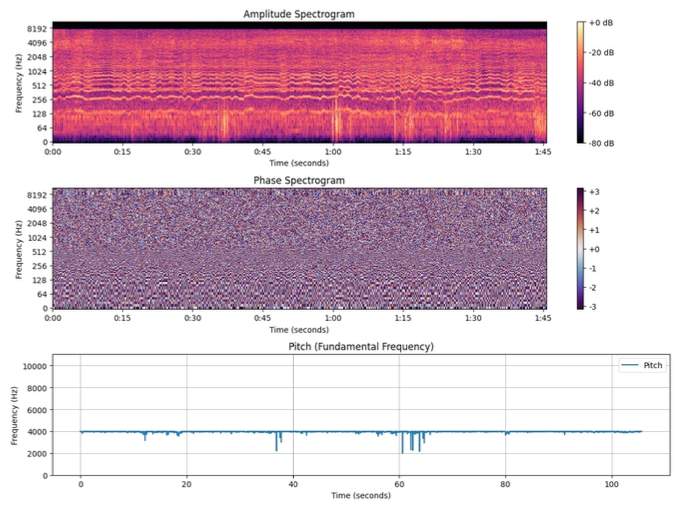

$$|X(f, t)|=\sqrt{\operatorname{Re}(X(f, t))^2+\operatorname{Im}(X(f, t))^2} \tag{2}$$

where $\operatorname{Re}(X(f, t))$ and $\operatorname{Im}(X(f, t))$ represent the real and imaginary parts of $X(f,t)$, respectively. This magnitude spectrogram provides the amplitude of each frequency component, allowing the model to focus on prominent noise frequencies and their variations over time, which is crucial for generating effective anti-noise signals.

Time-frequency representation is crucial for identifying key noise features associated with the UAV’s propeller sounds. By visualizing the spectrogram, it becomes easier to analyze the dominant frequency bands and transient patterns that characterize the noise[26], especially for propeller-driven UAVs, which exhibit repetitive spectral patterns. The CNN model subsequently processes these spectrograms, leveraging the temporal and spectral data to enhance the accuracy of anti-noise generation.

Furthermore, parameters such as the window function $w(\tau-t)$ and the window length influence the resolution of the spectrogram. A shorter window length provides higher temporal resolution, allowing for precise tracking of rapid changes in noise, while a longer window length improves frequency resolution[27], which is beneficial for capturing consistent noise patterns over time. These parameters are selected based on the specific characteristics of the UAV noise, ensuring that the spectrogram accurately represents both the time and frequency domains of the input signal. This allows the model to capture crucial features in both stable and dynamic noise environments.
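
A discrete version of the STFT and the magnitude spectrogram described in this section can be sketched with the Python standard library alone. This is an illustrative sketch under stated assumptions, not the authors' code: the Hann window, the function names `hann` and `stft_magnitude`, and the small demo values (8 kHz sampling rate, 256-sample frames, 64-sample hop, 1 kHz test tone) are choices made here so that a direct DFT stays fast. The last two lines only work out the resolution implied by the frame size, hop length, and sampling rate reported in Section 3.2.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n (an assumed window choice)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / n) for k in range(n)]


def stft_magnitude(signal, frame_size, hop):
    """Return |X[f][t]| for bins 0..frame_size/2 using a direct DFT per frame."""
    window = hann(frame_size)
    n_bins = frame_size // 2 + 1
    # Precompute e^{-j 2 pi k / frame_size}; the DFT only needs these values.
    twiddle = [cmath.exp(-2j * math.pi * k / frame_size) for k in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        seg = [signal[start + k] * window[k] for k in range(frame_size)]
        # abs() of a complex number is sqrt(Re^2 + Im^2), i.e. the magnitude.
        spectrum = [
            abs(sum(seg[k] * twiddle[(f * k) % frame_size] for k in range(frame_size)))
            for f in range(n_bins)
        ]
        frames.append(spectrum)
    # Transpose so rows are frequency bins and columns are time frames.
    return [list(col) for col in zip(*frames)]


# Small demo values (assumptions) so the pure-Python DFT runs quickly.
SR, FRAME, HOP = 8000, 256, 64
tone_hz = 1000.0
x = [math.sin(2 * math.pi * tone_hz * n / SR) for n in range(1024)]

mag = stft_magnitude(x, FRAME, HOP)
peak_bin = max(range(len(mag)), key=lambda f: mag[f][0])
peak_hz = peak_bin * SR / FRAME
print(f"{len(mag)} bins x {len(mag[0])} frames, peak near {peak_hz:.0f} Hz")

# Resolution implied by the settings reported in Section 3.2.
bin_width_hz = 22050 / 2048  # about 10.8 Hz per frequency bin
hop_ms = 1000 * 512 / 22050  # about 23.2 ms between successive frames
```

The two derived numbers make the window-length trade-off concrete: halving the frame size would double the bin width, while the hop length sets how often a new spectrogram column becomes available.
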
2.4 Layered Architecture for Feature Extraction

The proposed system leverages a series of Convolutional, Pooling, and Fully Connected layers to process the spectrogram $X(f,t)$ of the UAV propeller noise signal, extracting and refining features essential for noise cancellation. The Convolutional Layer applies learnable filters $W_c$ across the spectrogram, performing a convolution operation at each position $(i,j)$ to produce output $h_c(i,j)$ based on local frequency and time patterns. This operation is defined as:

$$h_c(i, j)=\sum_{m=0}^{M-1} \sum_{n=0}^{N-1} W_c(m, n) \cdot X(i+m, j+n)+b_c \tag{3}$$

where $M$ and $N$ denote the filter dimensions and $b_c$ is the bias term. These filters capture crucial noise characteristics, such as prominent frequency peaks and temporal variations tied to propeller noise. Following this, the Pooling Layer reduces the spatial dimensions[28] of the feature maps, capturing only the most prominent features from each region by taking the maximum value within a pooling window, represented by

$$h_p(i, j)=\max _{(m, n) \in \mathcal{R}_{i j}} h_c(m, n) \tag{4}$$

where $\mathcal{R}_{i j}$ denotes the pooling window associated with output position $(i,j)$.

This layer helps the model prioritize significant noise components and reduces the impact of minor fluctuations, thus enhancing the ANC system’s efficiency. Finally, the Fully Connected Layer flattens the output into a single feature vector $\mathbf{Z}$ and computes a linear combination[28] of these features, transforming them into the anti-noise signal $\hat{s}(t)$. This transformation is represented by:

$$h_{f c}=\sigma\left(\sum_{k=1}^K w_k z_k+b_{f c}\right) \tag{5}$$

where $w_k$ and $b_{f c}$ are the layer’s weights and bias, $z_k$ are the elements of $\mathbf{Z}$, and $\sigma$ is an activation function. This layer integrates the extracted features, mapping them to an anti-noise signal that mirrors the UAV noise in amplitude but is precisely 180° out of phase, thus enabling effective noise cancellation through destructive interference.

2.5 Generating Anti-Noise Sound Signal

Once the features have been extracted from the UAV propeller noise spectrogram and their inverse values calculated, the system generates an anti-noise signal $\hat{s}(t)$ designed to mirror the characteristics of the primary noise $s(t)$ but with an opposite phase. The extracted features (time-domain characteristics, frequency components, amplitude, and phase), along with their respective inverse values, are combined into a final feature vector that serves as input for the anti-noise generation function. Using this vector of inverse features, the anti-noise signal $\hat{s}(t)$ is formulated as:

$$\hat{s}(t)=A^{-1} \cos \left(\omega t+\Phi^{-1}\right) \tag{6}$$

where $A^{-1}$ and $\Phi^{-1}$ represent the inverse amplitude and phase components of the primary noise, respectively, while $\omega$ is the angular frequency of the noise, which determines the rate of oscillation of both the primary noise and anti-noise signals. Equation 6 ensures that $\hat{s}(t)$ matches $s(t)$ in magnitude and opposes it in phase. To achieve perfect cancellation, both the inverse amplitude $A^{-1}$ and inverse phase $\Phi^{-1}$ must be precisely synchronized and transmitted to overlap with the primary sound $s(t)$ at the exact same time slot, as illustrated in Fig. 3. The anti-noise signal is further refined by minimizing the Mean Squared Error (MSE) loss between $\hat{s}(t)$ and the target anti-noise, ensuring precise phase alignment for effective noise cancellation.

Ⅲ. Experiment

The experimentation phase included preparing and pre-processing the dataset, followed by model training and testing. Simulations were first conducted to assess initial model performance in controlled environments, and the trained model was then integrated into the UAV setup for real-time testing. These evaluations, detailed in the following subsections, confirm the model’s noise cancellation capabilities in both simulated and physical environments.
3.1 Dataset Acquisition and Preprocessing

An extensive dataset, comprising more than 2,200 audio recordings, was carefully curated from two primary sources: publicly available datasets obtained from reliable internet repositories and custom recordings captured in controlled environments. The publicly sourced data provided a diverse range of propeller noise profiles under various operational conditions, while the custom recordings were specifically tailored to mimic real-world scenarios, including noise variations introduced by different propeller types and speeds. Each recording underwent preprocessing to extract critical audio features such as frequency, pitch, phase, amplitude envelope, and temporal characteristics. These features were systematically stored in a structured CSV format to streamline further analysis and model training. The dataset was divided into 70% for training, 15% for validation, and 15% for testing, ensuring an even distribution across various noise profiles and conditions. This approach ensured that the dataset represented diverse propeller noise characteristics, enabling the model to generalize well to new and unseen noise scenarios.

3.2 Model Training

For model training, each audio sample was converted into a spectrogram representation using a frame size of 2048 samples and a hop length of 512 samples, with a sampling rate of 22050 Hz. These settings provided a balanced time-frequency resolution to capture UAV noise characteristics effectively. The CNN architecture employed in this study consisted of multiple convolutional layers, each followed by max-pooling layers for efficient feature extraction. The convolutional layers used filters to detect spatial patterns within the spectrogram, leveraging cross-correlation to capture variations in UAV noise characteristics.
Activation functions such as the Rectified Linear Unit (ReLU) were applied after each convolutional and fully connected layer to enhance the model’s capacity for learning complex noise patterns. In the deeper layers, the model learned intricate wave patterns characteristic of UAV propeller noise, while the fully connected layers generated noise cancellation signals from these extracted features. The model’s final layer used a linear activation function to produce the ANC signal output. To optimize model performance, the mean squared error (MSE) loss function with a regularization term $\lambda=0.0001$ was employed to minimize the difference between the generated noise-canceling signal and the primary UAV noise. The loss function is defined as:

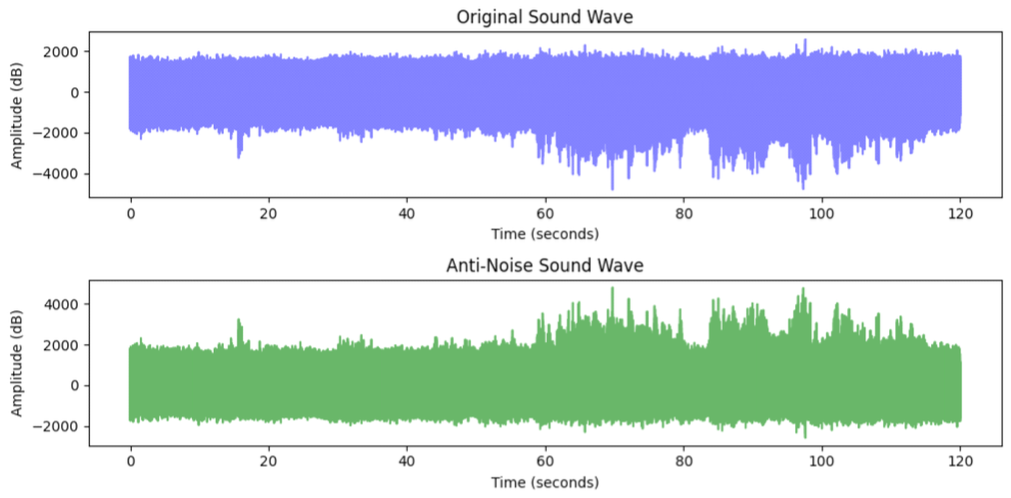

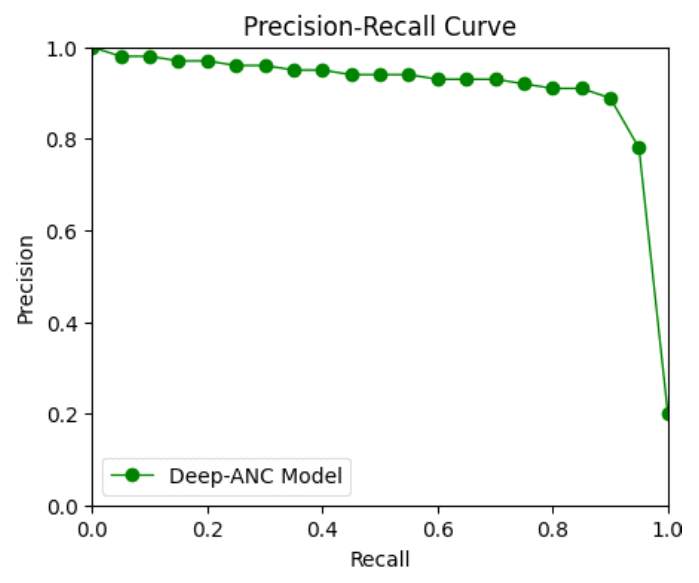

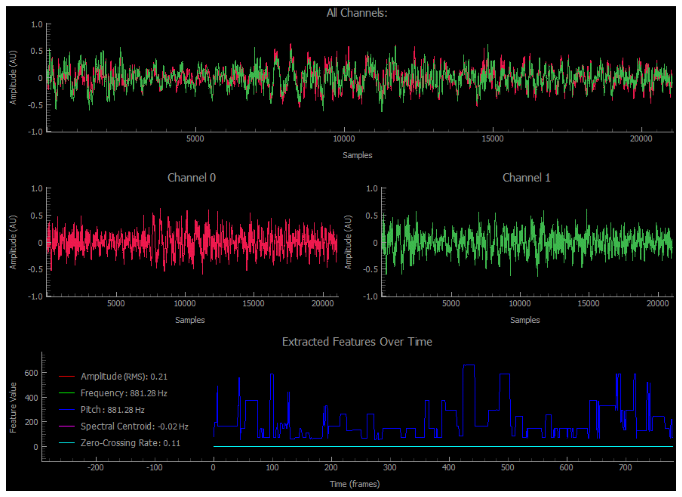

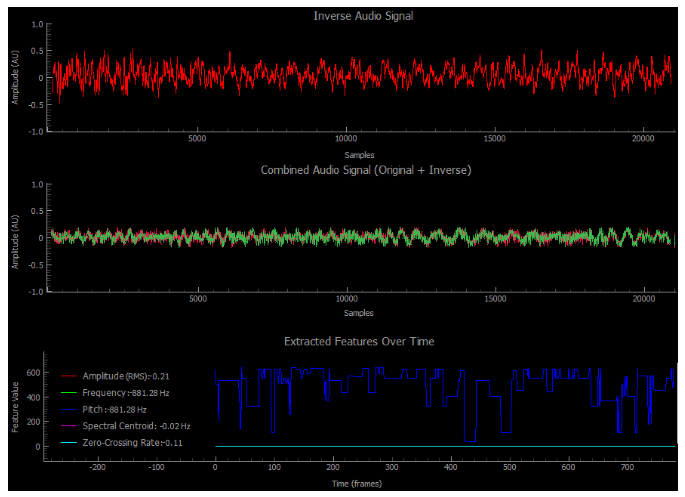

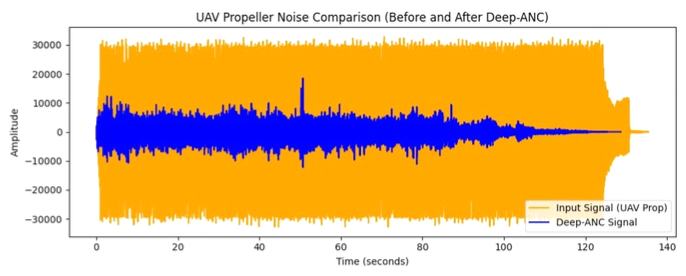

$$\mathscr{L}=\frac{1}{N} \sum_{i=1}^N\left(y_i-\hat{y}_i\right)^2+\lambda\|W\|^2 \tag{7}$$

where $N$ is the number of training samples and $y_i$ denotes the primary UAV propeller audio for the $i$-th sample, which serves as the input to the model. The corresponding $\hat{y}_i$, the model’s ANC output, is generated to be the inverse of the primary audio, such that when combined with $y_i$ it results in a reduction of the original primary noise. To create $\hat{y}_i$, the model learns to extract temporal and spectral patterns from $y_i$ and generate the anti-noise signal through a series of convolutional layers. The target for $\hat{y}_i$ is labeled by taking the original UAV propeller audio and processing it to obtain the exact inverse signal that will cancel out the noise. This process ensures that, during training, the model learns to minimize the error between the generated ANC output and the primary noise signal, thereby learning to cancel out the noise. In Equation 7, $\lambda\|W\|^2$ represents the regularization term that controls overfitting. Training used the Adam optimizer with a learning rate of 0.001, proceeding for 75 epochs with a batch size of 32. Early stopping was implemented with a patience of 5 epochs based on validation loss, so that the model would generalize across various noise scenarios.

3.3 Evaluation

To assess the performance of our deep learning model, rigorous evaluation was conducted, focusing on both quantitative metrics and visual performance indicators such as spectrograms.
Once the model began receiving real-time propeller noise as input, it generated corresponding spectrograms as shown in Fig. 4, suitable for visualizing and identifying UAV noise characteristics. The first plot in Fig. 4 represents the Amplitude Spectrogram of the incoming UAV propeller noise. In this plot, the x-axis shows time, while the y-axis represents frequency. The color intensity corresponds to the amplitude of the frequency components at each time point. Brighter regions indicate higher amplitude, while darker regions indicate lower amplitude. This spectrogram provides insight into the energy distribution of the noise signal across time and frequency.

For UAV propeller noise, prominent frequency bands are observed, particularly in the low-frequency range (below 100 Hz) and high-frequency range (between 1-10 kHz). For example, at around 60 seconds, a peak in the amplitude is observed at approximately 2000 Hz, indicating a strong high-frequency component associated with the UAV’s propeller dynamics. Similarly, at around 37 seconds, the amplitude peaks around 500 Hz, where lower-frequency vibrations are most dominant. These frequency bands are significant as they provide insights into the primary sources of noise, which the model uses to focus its cancellation efforts.

The second plot shows the Phase Spectrogram of the incoming propeller noise. The x- and y-axes represent time and frequency, respectively. However, in this plot, the color intensity is related to the phase of the frequency components rather than their amplitude. Phase represents the timing relationship between the different frequency components, which is essential for understanding the signal’s waveform structure.

In relation to UAV propeller noise, the phase spectrogram reveals the phase shifts that occur across the frequency components. This information is crucial for the deep learning model in generating accurate anti-noise signals.
While phase alone does not provide information about the energy or loudness of the noise, it is vital for synchronizing the cancellation signal to ensure effective destructive interference. A clear alignment of phase information between the original noise and the generated anti-noise signal can lead to more efficient noise cancellation.

The third plot in Fig. 4 illustrates the Pitch Spectrogram, or Fundamental Frequency, of the incoming propeller noise. The pitch corresponds to the perceived frequency of the sound, primarily related to the fundamental frequency of the noise signal. At around 60 seconds, the pitch spectrogram shows a strong peak around 2000 Hz, which is indicative of the fundamental frequency associated with the UAV’s propeller noise. This periodic pattern is characteristic of a constant rotational speed, or a slight fluctuation in the propeller’s rotation, which is periodic in nature. Such observations help the deep learning model identify and cancel repetitive elements of the noise.

The model extracted critical features from these spectrograms, such as temporal frequency variations, amplitude, and phase shifts, enabling it to generate precise anti-noise signals that aligned with the primary noise. Through this alignment, destructive interference significantly reduced the UAV noise.

The performance metrics further underscore the model’s capability: it achieved an accuracy of 94.5%, a precision of 93.2%, a recall of 96.1%, and an F1-score of 94.6%, as illustrated in Fig. 5. The achieved accuracy indicates a high level of reliability in correctly identifying noise events, suggesting that the model performs consistently across various noise scenarios. With 93.2% precision, the model successfully minimizes false positives, ensuring that noise events are rarely misclassified. High recall demonstrates the model’s sensitivity to noise, ensuring that it detects nearly all noise events.
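As a quick consistency check on the figures quoted above, the F1-score is the harmonic mean of precision and recall, so it can be recomputed from the reported 93.2% and 96.1%. The sketch below also shows how all four metrics follow from confusion-matrix counts. The function names and the example counts are hypothetical; the paper does not state how noise events were labelled or counted.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp)      # share of flagged events that were real
    recall = tp / (tp + fn)         # share of real events that were flagged
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return accuracy, precision, recall, f1_from(precision, recall)


# Recompute the F1-score from the reported precision and recall.
print(f"{f1_from(0.932, 0.961):.3f}")  # 0.946

# Hypothetical counts, used only to show the call signature.
accuracy, precision, recall, f1 = classification_metrics(tp=8, fp=2, fn=2, tn=8)
```

The recomputed value of 0.946 agrees with the reported F1-score of 94.6%.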
Detecting nearly all noise events is particularly critical for real-world noise cancellation applications, where even subtle noise occurrences need to be captured. Furthermore, the F1-score reflects a well-balanced performance, combining high precision and recall. This indicates that the model strikes a good balance between minimizing false positives and ensuring comprehensive noise detection. Finally, the loss value of 0.115 shows that the model generates anti-noise signals that closely match the expected output, reinforcing the effectiveness of the noise cancellation process. These high scores highlight the model’s accuracy and responsiveness, emphasizing its potential for real-world applications.

Additionally, Fig. 6 illustrates the amplitude reduction in UAV propeller noise before and after processing, demonstrating the tangible effect of the model’s noise cancellation. The model achieved a 36 dB reduction in UAV propeller noise, decreasing the noise level from 85 dB (pre-processed) to 49 dB (post-processed). This significant reduction corresponds to a perceptually noticeable decrease, reinforcing the model’s effectiveness in noise cancellation.

Fig. 6. Waveform comparisons for Input signal (UAV Propeller Noise) with Audio Signal Provided by Deep-ANC model.

3.4 Hardware Implementation

To further validate our approach, we implemented real-time UAV noise cancellation using carefully selected hardware and configurations, allowing us to analyze the model’s effectiveness in realistic operational conditions. This practical implementation showcased the model’s capability to mitigate propeller noise effectively during flights. Our setup included a quadcopter UAV equipped with an Nvidia Jetson NX for onboard processing, high-quality microphones (ReSpeaker Mic Array v2.0) for precise noise capturing from all directions, audio interface cards for sound signal transmission, and strategically placed speakers.
The hardware setup of the UAV used for experimental validation is illustrated in Fig. 7. The hardware was selected to enable efficient real-time noise reduction, ensuring that the components were compatible both with each other and with our proposed model for active noise cancellation.

Fig. 7. UAV hardware setup for testing the Active Noise Cancellation (ANC) system: The platform includes an Nvidia Jetson NX for real-time processing, ReSpeaker Mic Array for noise capture, and speakers for anti-no

The UAV setup process involved precisely integrating the microphones and speakers onto the drone platform, ensuring strategic placement to maximize propeller noise capture and efficient anti-noise transmission. The CNN-based ANC model was deployed on the Jetson NX, alongside essential audio processing libraries and dependencies, enabling seamless real-time audio analysis and anti-noise generation.

To assess the ANC model’s impact, we conducted both indoor and outdoor UAV flight tests to evaluate the system’s effectiveness in different environments. Initially, we performed standard UAV flights without activating the ANC system, resulting in high noise levels due to the propeller operation. In the subsequent tests, we activated the ANC model and assessed its noise-canceling performance. During these flights, the propeller noise was captured by the microphones and transmitted to the Jetson NX board via the audio interface cards. The model then processed this input to generate anti-noise signals, which were emitted through the speakers positioned towards each propeller.

The outdoor flight test results, shown in Fig. 8, demonstrate the practical efficacy of the proposed system in real-world conditions. Fig. 9 illustrates the waveform plots of the received UAV propeller noise signal, which is then split across different channels, along with the extracted features. Fig. 10 shows the inverse noise signal generated by the model and the combined effect of the primary and inverse signals, highlighting the significant noise reduction achieved through destructive interference in outdoor flight scenarios. This setup confirmed that the deep learning-based ANC system effectively reduces noise, facilitating quieter flights and proving its feasibility for real-world UAV applications.

Table 1 presents a comparative analysis between traditional ANC approaches and our proposed Deep-ANC model. As shown, the proposed Deep-ANC model outperforms the other approaches in terms of accuracy, noise reduction, reaction time, and overall performance.

Table 1. Comparison of Traditional ANC Models and the Proposed Deep-ANC Model
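To give a sense of how tightly the anti-noise must track the primary noise for a reduction of the size reported in Section 3.3, the following sketch cancels a single tone with an anti-noise signal that carries a small phase error. It is an idealized illustration, not the authors' measurement procedure: the single 200 Hz tone, the 16 kHz sampling rate, and the chosen phase errors are assumptions, and real propeller noise is broadband. For a tone with anti-noise gain $g$ and phase error $\varphi$, the residual amplitude relative to the primary is $|1 - g e^{j\varphi}|$.

```python
import numpy as np


def reduction_db(primary, residual):
    """Noise reduction in dB, computed from the RMS of the two signals."""
    rms_primary = np.sqrt(np.mean(np.square(primary)))
    rms_residual = np.sqrt(np.mean(np.square(residual)))
    return 20.0 * np.log10(rms_primary / rms_residual)


def simulate_tone_cancellation(freq_hz, phase_error_deg, gain=1.0,
                               fs=16000, duration_s=1.0):
    """Return (primary, residual) for a tone plus imperfect anti-noise."""
    t = np.arange(int(fs * duration_s)) / fs
    primary = np.sin(2 * np.pi * freq_hz * t)
    # Ideal anti-noise is exactly -primary; here it has a gain and phase error.
    anti_noise = -gain * np.sin(2 * np.pi * freq_hz * t
                                + np.deg2rad(phase_error_deg))
    return primary, primary + anti_noise


# 85 dB before and 49 dB after is a 36 dB drop, i.e. an amplitude ratio of
# 10 ** (36 / 20), roughly 63 to 1.
amplitude_ratio = 10 ** ((85 - 49) / 20)

# With matched gain, a phase error of about 0.9 degrees already limits the
# reduction of this tone to roughly 36 dB.
primary, residual = simulate_tone_cancellation(freq_hz=200.0, phase_error_deg=0.908)
print(round(reduction_db(primary, residual), 1))  # 36.0
```

In this idealized single-tone case, a phase error of 5 degrees would cap the reduction at about 21 dB, which illustrates why the anti-noise must match the primary noise closely in both magnitude and phase.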

Ⅳ. Conclusion

This study proposed a deep learning-based CNN model for active noise cancellation (ANC) of UAV propeller noise, demonstrating high effectiveness in noise reduction by leveraging CNN capabilities. The model underwent comprehensive training, testing, and validation, achieving an accuracy of 94.5%, a precision of 93.2%, and a recall of 96.1%. The noise reduction results were validated across various flight scenarios, with the successful hardware implementation highlighting the model’s capacity to perform in dynamic operational settings. Overall, the proposed system achieved a 36 dB reduction in UAV propeller noise. This study underscores the potential of deep learning approaches for tackling complex noise challenges in UAV operations, offering a promising foundation for future noise mitigation strategies.

Future work will focus on enhancing the system’s efficiency and exploring a much broader range of UAV mobility scenarios. Practical implementation in diverse conditions will serve as key performance indicators (KPIs) for evaluating the system’s robustness and adaptability. By expanding the range of scenarios and refining the ANC model, we aim to further improve noise cancellation effectiveness and operational efficiency in real-world applications.

Biography

Faisal Ayub Khan
Jul. 2021: BS (CS) degree, School of Computer Science and Computer Engineering, HITEC University
Mar. 2023: MS degree, School of IT Convergence and Engineering, Kumoh National Institute of Technology
[Research Interest] Artificial Intelligence, Machine Learning, Computer Vision, 5G/6G Wireless Communication Network Systems
[ORCID:0009-0002-1558-1453]

Biography

Soo Young Shin
Feb. 1999: B.Eng. degree, School of Electrical and Electronic Engineering, Seoul National University
Feb. 2001: M.Eng. degree, School of Electrical, Seoul National University
Feb. 2006: Ph.D. degree, School of Electrical Engineering and Computer Science, Seoul National University
Sept. 2010-Current: Professor, School of IT Convergence and Engineering, Kumoh National Institute of Technology
[Research Interest] 5G/6G Wireless Communications and Networks, Signal Processing, Internet of Things, Mixed Reality, and Drone Applications.
[ORCID:0000-0002-2526-2395]


IEEE Style F. A. Khan and S. Y. Shin, "Suppressing the Acoustic Effects of UAV Propellers through Deep Learning-Based Active Noise Cancellation," The Journal of Korean Institute of Communications and Information Sciences, vol. 50, no. 4, pp. 535-548, 2025. DOI: 10.7840/kics.2025.50.4.535.

ACM Style Faisal Ayub Khan and Soo Young Shin. 2025. Suppressing the Acoustic Effects of UAV Propellers through Deep Learning-Based Active Noise Cancellation. The Journal of Korean Institute of Communications and Information Sciences, 50, 4, (2025), 535-548. DOI: 10.7840/kics.2025.50.4.535.

KICS Style Faisal Ayub Khan and Soo Young Shin, "Suppressing the Acoustic Effects of UAV Propellers through Deep Learning-Based Active Noise Cancellation," The Journal of Korean Institute of Communications and Information Sciences, vol. 50, no. 4, pp. 535-548, 4. 2025. (https://doi.org/10.7840/kics.2025.50.4.535)
